Bento Technical Design

Overview

Bento's infrastructure is composed of a few core open source projects:

- Docker

- PostgreSQL

- Redis

- MinIO

- Grafana (optional for monitoring)

Bento Components

Bento's components are built on top of this core infrastructure:

- API

- TaskDB

- CPU (executor) Agent

- GPU (prover) Agent

- Aux Agent

These components form the basis of Bento, so each one is critical to its operation.

Technical Design

Bento's design philosophy is centered around TaskDB. TaskDB is a database schema in PostgreSQL that acts as a central communications hub, scheduler, and queue for the entire Bento system.

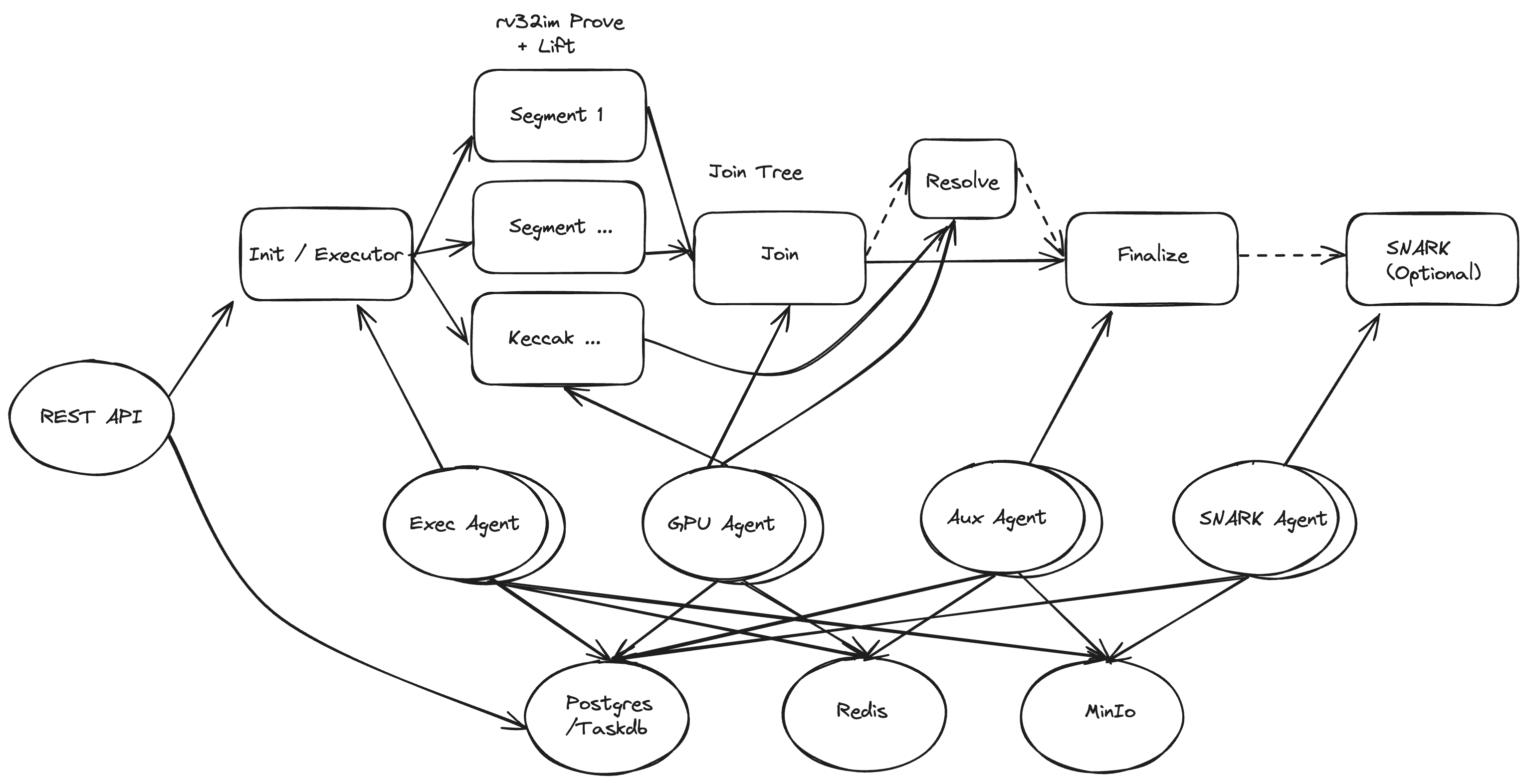

The following diagram is a visual representation of the proving workflow:

Bento has the following application containers:

- REST API

- Agents (of different work types exec/gpu/aux/snark)

As demonstrated above, Bento breaks down tasks into these major actions:

- Init/Setup (executor) - this action generates continuations or segments to be proven and places them on Redis.

- Prove + lift (GPU/CPU agent) - proves a segment on CPU/GPU and lifts the result to Redis.

- Join - takes two lifted proofs and joins them together into one proof.

- Resolve - produces a final join and verifies all the unverified claims, effectively completing any composition tasks.

- Finalize - uploads the final proof to MinIO.

- SNARK - converts a STARK proof into a SNARK proof using rapidsnark.
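To make the join step concrete, here is a minimal sketch of how lifted proofs could be paired until a single proof remains. It assumes that the output of a join can itself be joined and that neighbours are paired in order; the function name `plan_joins` and the `liftN`/`joinN` labels are hypothetical and are not taken from Bento's code, whose planner may pair work differently.

```python
from typing import List, Tuple


def plan_joins(num_segments: int) -> List[Tuple[str, str, str]]:
    """Return (left, right, output) join tasks that reduce N lifted proofs to one.

    Hypothetical sketch: adjacent proofs are paired level by level so that
    segment order is preserved.
    """
    if num_segments < 1:
        raise ValueError("need at least one segment")
    level = [f"lift{i}" for i in range(num_segments)]
    joins: List[Tuple[str, str, str]] = []
    while len(level) > 1:
        next_level = []
        # Pair neighbours: (0, 1), (2, 3), ...
        for i in range(0, len(level) - 1, 2):
            out = f"join{len(joins)}"
            joins.append((level[i], level[i + 1], out))
            next_level.append(out)
        # An unpaired trailing proof is carried to the next level unchanged.
        if len(level) % 2 == 1:
            next_level.append(level[-1])
        level = next_level
    return joins
```

Under these assumptions, N segments always need N - 1 joins, and joins within one level are independent of each other, so they could run on different workers at the same time.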

Redis

To share intermediate files (such as segments) between workers, Bento uses Redis as a fast intermediary. Writing to Redis gives fast cross-machine file access and a high-bandwidth backbone for sharing data between nodes and workers.

TaskDB

TaskDB is the center of how Bento schedules and prioritizes work. It provides the ability to create a job which will contain many tasks, each with different actions in a stream of work. This stream is ordered by priority and dependencies. TaskDB's core job is to correctly emit work to agents via long polling in the right order and priority. As segments stream out of the executor, TaskDB delegates the work plan such that GPU nodes can start proving before the executor completes.
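The ordering rule described above (priority first, but never before dependencies are satisfied) can be illustrated with a small in-memory model. This is only a sketch: the real TaskDB is a PostgreSQL schema, and the `Task` class, its fields, and `next_ready` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class Task:
    task_id: str
    priority: int                                  # higher runs first (assumption)
    deps: Set[str] = field(default_factory=set)    # task_ids that must finish first
    done: bool = False


def next_ready(tasks: Dict[str, Task]) -> Optional[Task]:
    """Return the highest-priority unfinished task whose dependencies are all done."""
    ready = [
        t for t in tasks.values()
        if not t.done and all(tasks[d].done for d in t.deps)
    ]
    # max() keeps the first task on ties; None means nothing is runnable yet.
    return max(ready, key=lambda t: t.priority, default=None)
```

Because tasks can be added to the dictionary at any time, prove tasks inserted while the executor is still running become eligible immediately, while a join stays blocked until both of its inputs are done.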

Prioritizing Work Streams

TaskDB also has the ability to prioritize specific work streams using two separate modes:

- Priority multiplier mode allows individual users and task types to be scheduled ahead of other users.

- Dedicated resources mode allows a stream's user to get priority access to N workers on that stream. For example, if user1 has a 10 GPU stream, then that work will always get priority over the normal pool of users that have a dedicated count of 0. But once user1 has 10 concurrent GPU tasks, any additional work is scheduled alongside the rest of the priority pool of user work.
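The interaction of the two modes can be sketched as a single selection function. This is an illustrative model only: the `Stream` fields and `pick_stream` are hypothetical, and treating the priority multiplier as a simple "largest value wins" rule is an assumption rather than TaskDB's actual formula.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Stream:
    user: str
    dedicated: int = 0        # workers reserved for this stream (hypothetical field)
    multiplier: float = 1.0   # priority multiplier (hypothetical field)
    running: int = 0          # tasks currently executing
    pending: int = 0          # tasks waiting to be scheduled


def pick_stream(streams: List[Stream]) -> Optional[Stream]:
    """Choose which stream the next free worker should serve."""
    waiting = [s for s in streams if s.pending > 0]
    if not waiting:
        return None
    # Streams still below their dedicated count are served first.
    reserved = [s for s in waiting if s.running < s.dedicated]
    pool = reserved or waiting
    # Within the chosen pool, the largest multiplier wins (assumed rule).
    return max(pool, key=lambda s: s.multiplier)
```

With `user1` at 9 of 10 dedicated GPUs, `pick_stream` returns `user1` even if another user has a larger multiplier; once `user1` reaches 10 running tasks, it competes in the general pool like everyone else.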

The Agent

Bento agents are long-polling daemons that opt in to specific actions. An agent can be configured to act as a:

- Executor

- GPU worker

- CPU worker

- SNARK agent

This allows Bento to run on diverse hardware that can specialize in tasks that need specific hardware:

- Executor - needs low core count but very high single thread core clock CPU performance

- GPU - needs a GPU device to run GPU accelerated proving

- CPU (optional) - run prove+lift on a CPU instead of a GPU, not advised for performance reasons

- SNARK - needs a node with a high CPU thread count and high core clock speed

The agent polls for work, runs the work, monitors for failures and reports status back to TaskDB.
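The poll, run, monitor, report cycle can be sketched as a small loop. Everything here is a stand-in: `poll`, `handlers`, and `report` are hypothetical callables supplied by the caller, not Bento APIs, and a real agent would long-poll TaskDB rather than call a local function.

```python
from typing import Any, Callable, Dict, Optional, Set, Tuple

# (task_id, action, payload): a hypothetical task shape for this sketch.
TaskTuple = Tuple[str, str, Any]


def run_agent(
    poll: Callable[[Set[str]], Optional[TaskTuple]],
    handlers: Dict[str, Callable[[Any], Any]],
    report: Callable[[str, str, Any], None],
    max_polls: int = 10,
) -> None:
    """Poll for opted-in actions, run them, and report the outcome."""
    for _ in range(max_polls):
        # Only ask for the actions this agent has opted in to.
        task = poll(set(handlers))
        if task is None:
            continue
        task_id, action, payload = task
        try:
            result = handlers[action](payload)
        except Exception as exc:
            # A failing task is reported instead of crashing the agent.
            report(task_id, "failed", str(exc))
        else:
            report(task_id, "done", result)
```

Passing only a `"prove"` handler makes the agent behave like a dedicated prover: tasks for other actions are left in the queue for agents that opted in to them.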

Further Information

More on the Executor

The executor (init) task is the first process run within a STARK proving workflow and iteratively generates the continuations work plan of prove+lift, join, resolve and finalize.

Internally, each "user" of Bento gets their own stream for each type of work. So user1 would have their own stream for CPU, GPU, Aux, and SNARK work types. Each stream has settings for priority multiplier and dedicated resources described above.

More on the GPU

The GPU agent does the heavy lifting of proving itself. Work is broken into power-of-2 segment sizes (128K, 256K, 512K, 1M, 2M, 4M cycles). The amount of GPU VRAM dictates which power of 2 to use as the SEGMENT_SIZE.

As a general rule of thumb, a segment size of:

- 1 million cycles requires 9~10GB of GPU VRAM

- 2 million cycles requires 17~18GB of GPU VRAM

- 4 million cycles requires 32~34GB of GPU VRAM

The performance optimization guide has a whole section on segment size benchmarking.
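As a rough illustration of the rule of thumb above, the following sketch picks the largest listed segment size that fits in a given amount of VRAM. It uses the upper end of each quoted range and expresses the size as a power-of-2 exponent (1M = 2^20, 2M = 2^21, 4M = 2^22 cycles); the table and function names are hypothetical, and benchmarking on real hardware remains the better guide.

```python
from typing import Optional

# Exponent -> approximate VRAM needed in GB (upper end of the ranges above).
VRAM_RULE_OF_THUMB_GB = {
    20: 10,  # 1M cycles
    21: 18,  # 2M cycles
    22: 34,  # 4M cycles
}


def largest_segment_po2(vram_gb: float) -> Optional[int]:
    """Return the largest listed power-of-2 exponent that fits in vram_gb.

    Returns None when the GPU is below the smallest listed figure; smaller
    segment sizes are not covered by this rule of thumb.
    """
    fitting = [po2 for po2, need in VRAM_RULE_OF_THUMB_GB.items() if need <= vram_gb]
    return max(fitting, default=None)
```

For example, a 24GB card maps to exponent 21 (2M cycles) under these figures, while a 16GB card maps to exponent 20 (1M cycles).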

More on SNARK

This agent will convert a STARK proof into a SNARK proof using rapidsnark. Performance is dependent on core clocks AND thread counts. Having a lot of cores but a very low core clock speed can adversely affect performance for the SNARK process.

REST API

The REST API provides an external interface to start, stop, and monitor jobs and tasks within TaskDB. Bento is intended to be a drop-in replacement for Bonsai, including being partially Bonsai API compatible. The Bonsai API docs provide a good reference for the Bento REST API.